MiniCPM-V

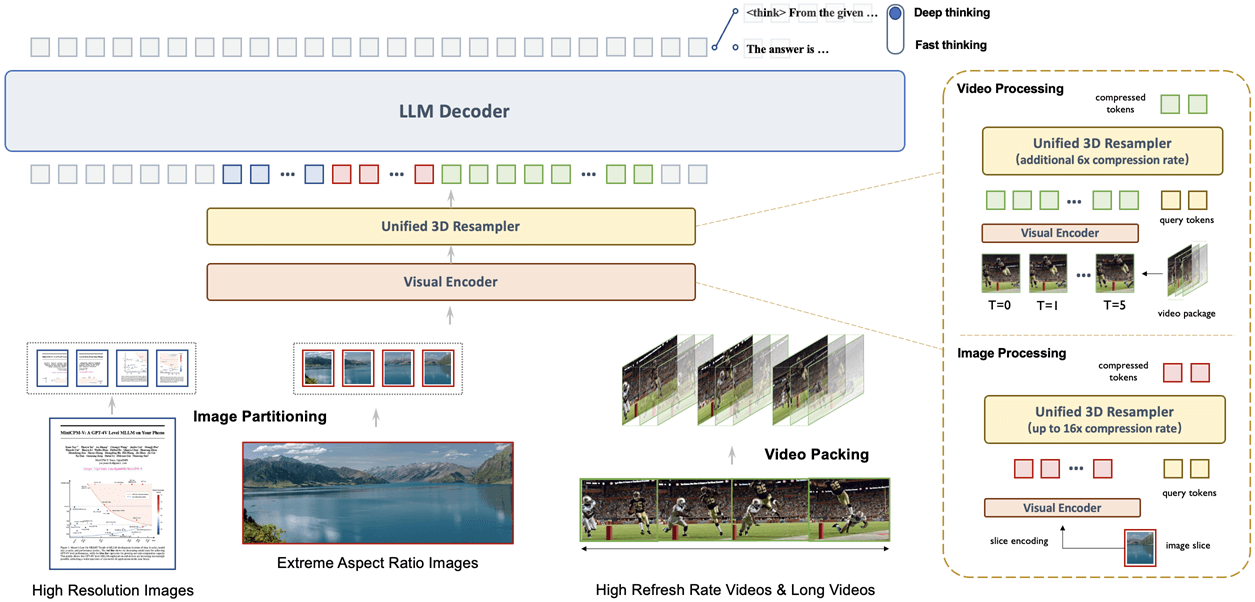

MiniCPM-V is a series of efficient end-side multimodal LLMs (MLLMs) that accept images, videos and text as input and deliver high-quality text output. MiniCPM-o additionally accepts audio input and produces high-quality speech output in an end-to-end fashion.

Guys, this newly open-sourced project from Tsinghua University reportedly outperforms GPT-4o on several benchmarks! With only 8 billion parameters, it is reported to beat some 72B models, and it can run entirely offline on your smartphone. Snap a photo of an English document for instant translation, capture handwritten notes and turn them into editable text in seconds, or upload a long video for automatic summarization. Key highlights: support for 30+ languages with seamless switching; a 96x compression ratio for video tokens, which keeps processing efficient; and versatile voice capabilities, including real-time Chinese-English dialogue, adjustable emotion and speech rate, and voice cloning for dubbing. On top of that, it is fully open source and free for commercial use. Previously, similar tools came with a hefty price tag and privacy concerns, but Tsinghua has made this one openly available, even for commercial projects. Interested developers, don't miss out: give it a try today!
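A compression ratio like 96x is simply raw visual tokens divided by the tokens the language model actually sees. The sketch below works through that arithmetic under assumptions that are not stated in the post: a vision encoder that cuts each frame into 14x14-pixel patches (one token per patch), and 6 frames at 448x448 jointly compressed into 64 tokens. Treat every constant as illustrative.

```python
# Worked arithmetic for a video-token compression ratio.
# All constants are ASSUMPTIONS for illustration, not confirmed model specs.
PATCH_SIZE = 14         # assumed patch side length in pixels
FRAME_SIDE = 448        # assumed square frame resolution (448 x 448)
NUM_FRAMES = 6          # assumed number of frames compressed together
COMPRESSED_TOKENS = 64  # assumed number of output tokens after compression


def patch_tokens_per_frame(side: int, patch: int) -> int:
    """Count non-overlapping patch tokens in a square frame."""
    per_axis = side // patch
    return per_axis * per_axis


def compression_ratio(frames: int, side: int, patch: int, out_tokens: int) -> float:
    """Raw patch tokens across all frames divided by compressed tokens."""
    raw = frames * patch_tokens_per_frame(side, patch)
    return raw / out_tokens


raw_tokens = NUM_FRAMES * patch_tokens_per_frame(FRAME_SIDE, PATCH_SIZE)
ratio = compression_ratio(NUM_FRAMES, FRAME_SIDE, PATCH_SIZE, COMPRESSED_TOKENS)
print(raw_tokens, ratio)  # 6144 96.0
```

Under these assumptions each frame yields 32 x 32 = 1024 patch tokens, so 6 frames give 6144 tokens, and squeezing them into 64 tokens gives 6144 / 64 = 96.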

Ultralytics YOLOv5

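As a core task in computer vision, object detection identifies and locates targets in images or video frames. Since YOLO (You Only Look Once) emerged, the field has been transformed: by predicting bounding boxes and class probabilities in a single forward pass of one network, YOLO made real-time detection practical.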
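"Locating" a target means predicting a bounding box, and a single-pass detector typically emits several overlapping candidate boxes for the same object. A common post-processing step in YOLO-style pipelines is greedy non-maximum suppression (NMS) based on intersection over union (IoU). The following is a plain-Python sketch of that generic technique, not Ultralytics' implementation; the `(x1, y1, x2, y2)` box format and the default 0.45 threshold are assumptions chosen for illustration.

```python
from typing import List, Tuple

# Assumed box format: (x1, y1, x2, y2) pixel corners, with x2 > x1 and y2 > y1.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: List[Box], scores: List[float], iou_thresh: float = 0.45) -> List[int]:
    """Greedy NMS: return indices of kept boxes, highest score first."""
    # Visit candidates from most to least confident.
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    while order:
        best = order.pop(0)
        keep.append(best)
        # Discard remaining boxes that overlap the kept box too much.
        order = [i for i in order if iou(boxes[best], boxes[i]) < iou_thresh]
    return keep


if __name__ == "__main__":
    candidates = [(10, 10, 110, 110), (12, 12, 112, 112), (200, 200, 260, 260)]
    confidences = [0.9, 0.8, 0.7]
    print(nms(candidates, confidences))  # [0, 2]
```

In the usage example the first two boxes cover nearly the same region (IoU of about 0.92), so the lower-scoring one is suppressed, while the distant third box survives as a separate detection.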